The ClassNames argument of fitcsvm distinguishes between the negative and positive classes, or specifies which classes to include in the data.

#MATLAB 2009 GRID ALPHA SOFTWARE#

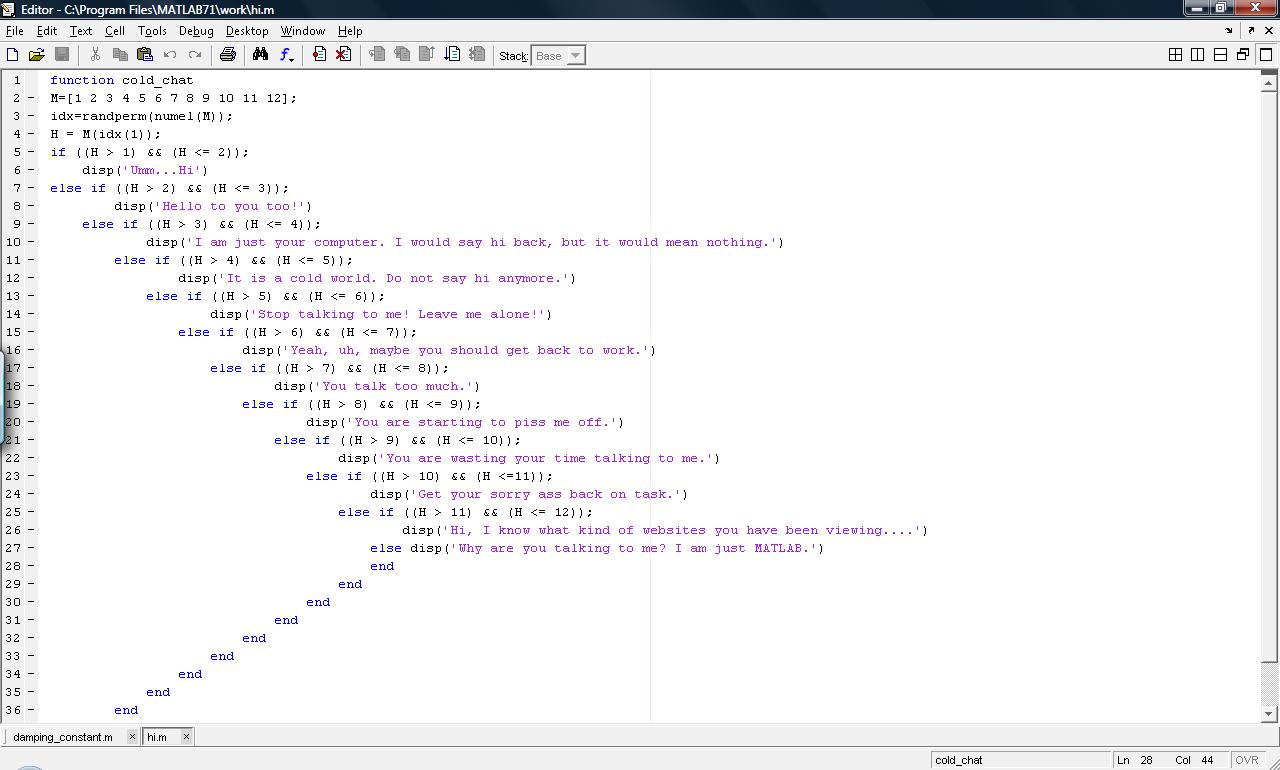

Using Support Vector Machines

As with any supervised learning model, you first train a support vector machine, and then cross validate the classifier. In addition, to obtain satisfactory predictive accuracy, you can use various SVM kernel functions, and you must tune the parameters of the kernel functions.

Train, and optionally cross validate, an SVM classifier using fitcsvm. Its main inputs are:

- X: Matrix of predictor data, where each row is one observation and each column is one predictor.
- Y: Array of class labels, with each row corresponding to the value of the corresponding row in X. Y can be a categorical, character, or string array, a logical or numeric vector, or a cell array of character vectors.
- KernelFunction: The default value is 'linear' for two-class learning, which separates the data by a hyperplane. The value 'gaussian' (or 'rbf') is the default for one-class learning, and specifies the Gaussian (or radial basis function) kernel. Choosing an appropriate kernel function is an important step in successfully training an SVM classifier.
- Standardize: Flag indicating whether the software should standardize the predictors before training the classifier.

Classifying New Data with an SVM Classifier

Use the trained machine to classify (predict) new data.

The mathematical approach using kernels relies on the computational method of hyperplanes. All the calculations for hyperplane classification use nothing more than dot products. Therefore, nonlinear kernels can use identical calculations and solution algorithms, and obtain classifiers that are nonlinear. The resulting classifiers are hypersurfaces in some space S, but the space S does not have to be identified or examined.

fitcsvm does not support the sigmoid kernel. Instead, you can define the sigmoid kernel yourself and specify it by using the 'KernelFunction' name-value pair argument. Note that not every set of the sigmoid parameters $p_1$ and $p_2$ yields a valid reproducing kernel. For details, see Train SVM Classifier Using Custom Kernel.
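The train, cross validate, and classify workflow described above can be sketched as follows. This is a minimal illustration, not a tuned model: the synthetic data and the class names 'neg' and 'pos' are invented for the example, and it assumes Statistics and Machine Learning Toolbox is installed.

```matlab
% Minimal sketch: train, cross validate, and classify with fitcsvm.
% The data and the class names 'neg'/'pos' are assumptions made for illustration.
rng(0);                                          % reproducible synthetic data
n = 100;
X = [randn(n,2) - 1.5; randn(n,2) + 1.5];        % rows = observations, columns = predictors
Y = [repmat({'neg'},n,1); repmat({'pos'},n,1)];  % cell array of character vectors

% Train with a Gaussian kernel on standardized predictors.
% The first entry of ClassNames is treated as the negative class.
SVMModel = fitcsvm(X,Y, ...
    'KernelFunction','rbf', ...
    'Standardize',true, ...
    'ClassNames',{'neg','pos'});

% Cross validate (10-fold by default) and estimate the misclassification rate.
CVSVMModel = crossval(SVMModel);
cvLoss = kfoldLoss(CVSVMModel);

% Classify new observations; the second output holds the classification scores.
newX = [-1.5 -1.5; 1.5 1.5];
[label,score] = predict(SVMModel,newX);
disp(label)
fprintf('Cross-validation loss: %.3f\n',cvLoss);
```

If the cross-validation loss is unsatisfactory, the usual next step is to adjust kernel parameters such as 'KernelScale' and 'BoxConstraint' and repeat the cross validation.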
This kernel-based approach relies on results from the theory of reproducing kernels.
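For reference, the standard form of these results can be sketched as follows. The symbols $G$, $\varphi$, and $p$ are notation assumed here for illustration; $S$ is the space mentioned in the text, and $p_1$, $p_2$ are the sigmoid kernel parameters. A kernel $G$ behaves like a dot product taken in $S$ after mapping the inputs with $\varphi$, which is why $S$ itself never has to be constructed:

$$
\begin{aligned}
G(x_1, x_2) &= \langle \varphi(x_1), \varphi(x_2) \rangle_S && \text{(defining property)} \\
G(x_1, x_2) &= (1 + x_1^\top x_2)^p && \text{(polynomial, positive integer } p\text{)} \\
G(x_1, x_2) &= \exp\!\left(-\lVert x_1 - x_2 \rVert^2\right) && \text{(Gaussian, or radial basis function)} \\
G(x_1, x_2) &= \tanh\!\left(p_1\, x_1^\top x_2 + p_2\right) && \text{(sigmoid)}
\end{aligned}
$$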

Some binary classification problems do not have a simple hyperplane as a useful separating criterion. For those problems, there is a variant of the mathematical approach that retains nearly all the simplicity of an SVM separating hyperplane.

For one-class or binary classification, if you have an Optimization Toolbox license, you can choose to use quadprog (Optimization Toolbox) to solve the one-norm problem. quadprog uses a good deal of memory, but solves quadratic programs to a high degree of precision. For more details, see Quadratic Programming Definition (Optimization Toolbox).
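As a hedged sketch of how the quadprog-based solver might be requested, the example below passes 'Solver','L1QP' to fitcsvm. The synthetic data is an assumption made for illustration, and the call requires both Statistics and Machine Learning Toolbox and an Optimization Toolbox license.

```matlab
% Minimal sketch: request the quadprog-based solver explicitly.
% Assumes an Optimization Toolbox license; the data is invented for illustration.
rng(1);
X = [randn(30,2) - 1; randn(30,2) + 1];   % small data set, because quadprog is memory hungry
Y = [-ones(30,1); ones(30,1)];            % numeric class labels

Mdl = fitcsvm(X,Y,'Solver','L1QP');       % solve the one-norm problem with quadprog
disp(Mdl.Solver)                          % solver recorded in the trained model
```

Keeping the data set small is deliberate here, since the memory cost of quadprog grows quickly with the number of observations.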

#MATLAB 2009 GRID ALPHA SERIES#

For binary classification, if you set a fraction of expected outliers in the data, then the default solver is the Iterative Single Data Algorithm (ISDA). Like Sequential Minimal Optimization (SMO), ISDA solves the one-norm problem. Unlike SMO, ISDA minimizes by a series of one-point minimizations, does not respect the linear constraint, and does not explicitly include the bias term in the model.

SMO minimizes the one-norm problem by a series of two-point minimizations. During optimization, SMO respects the linear constraint $\sum_i \alpha_i y_i = 0$, and explicitly includes the bias term in the model.
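The linear constraint can be checked numerically on a trained model. The sketch below is an illustration on invented data: it trains with 'Solver','SMO' and sums the products of the multipliers and labels over the support vectors (all other observations have a multiplier of zero, so they do not contribute).

```matlab
% Minimal sketch: verify sum_i alpha_i*y_i = 0 for a model trained with SMO.
% The synthetic data is an assumption made for illustration.
rng(2);
X = [randn(50,2) - 1; randn(50,2) + 1];
Y = [-ones(50,1); ones(50,1)];

Mdl = fitcsvm(X,Y,'Solver','SMO');

% Alpha holds the multipliers of the support vectors, and SupportVectorLabels
% holds their class labels coded as -1 or +1.
residual = sum(Mdl.Alpha .* Mdl.SupportVectorLabels);
fprintf('sum_i alpha_i*y_i = %.2e\n',residual);

% The bias term is stored explicitly in the model.
fprintf('bias term = %.4f\n',Mdl.Bias);
```

The residual is expected to be zero up to floating-point round-off, because each two-point update changes a pair of multipliers in a way that preserves the constraint.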

For one-class or binary classification, if you do not set a fraction of expected outliers in the data (see OutlierFraction), then the default solver is Sequential Minimal Optimization (SMO).
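The default-solver behavior for binary classification can be inspected on a trained model. The sketch below uses invented data and an arbitrary outlier fraction of 0.05, then reads the Solver property of each model to see which algorithm fitcsvm selected.

```matlab
% Minimal sketch: see which solver fitcsvm picks by default.
% The data and the outlier fraction 0.05 are assumptions made for illustration.
rng(3);
X = [randn(50,2) - 1; randn(50,2) + 1];
Y = [-ones(50,1); ones(50,1)];

MdlDefault = fitcsvm(X,Y);                          % no outlier fraction set
MdlOutlier = fitcsvm(X,Y,'OutlierFraction',0.05);   % expect 5% of the data to be outliers

fprintf('Solver without OutlierFraction: %s\n',MdlDefault.Solver);
fprintf('Solver with OutlierFraction:    %s\n',MdlOutlier.Solver);
```

To override the default in either case, pass the 'Solver' name-value pair argument explicitly.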
